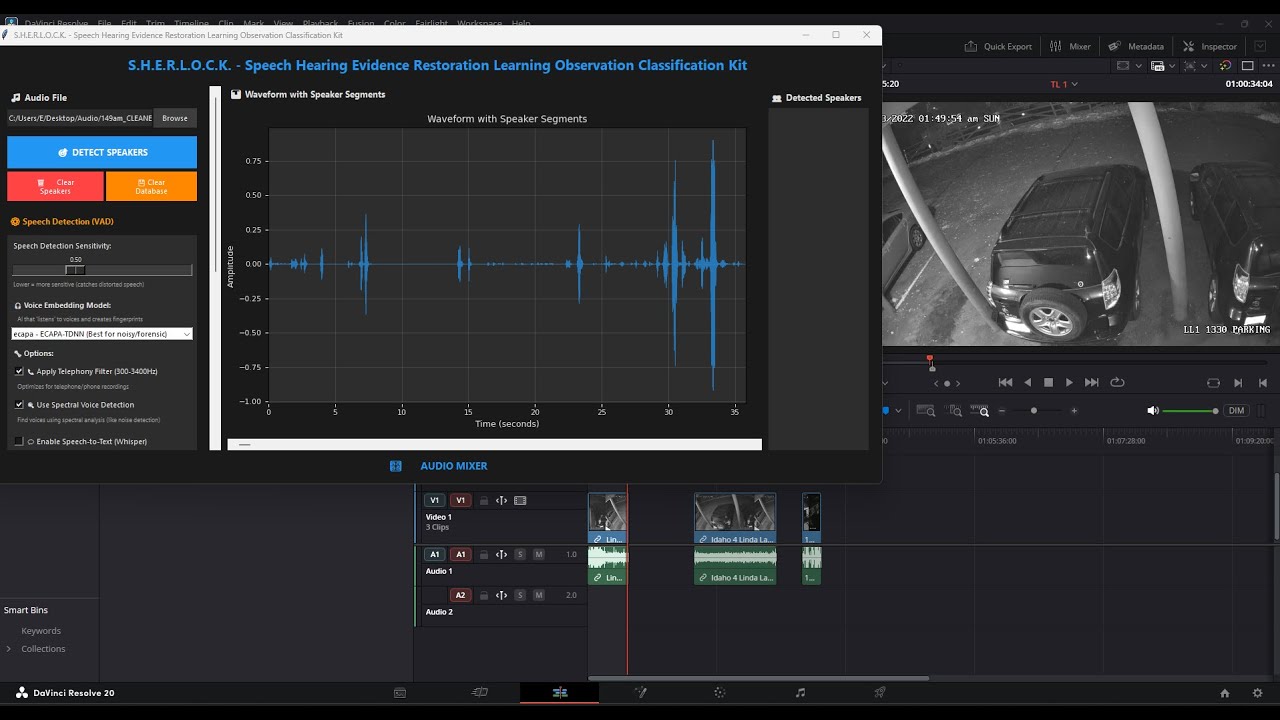

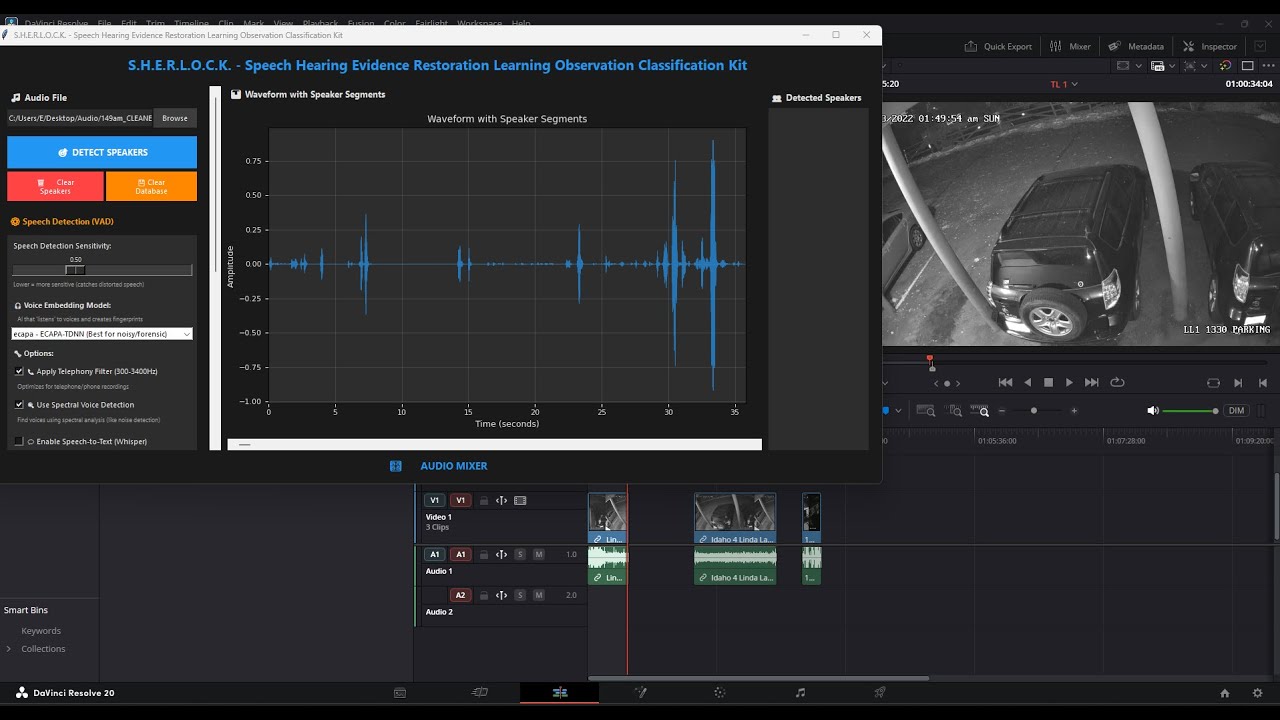

Using the new S.H.E.R.L.O.C.K program to go over the screams at 1:49 a.m. in the Idaho 4 case that nobody has wanted to talk about. S.H.E.R.L.O.C.K can help identify speakers and clean up audio files.

What is the S.H.E.R.L.O.C.K noise remover?

S.H.E.R.L.O.C.K stands for Speech Hearing Evidence Restoration Learning Observation Classification Kit, and the noise remover is one part of that larger kit.

The S.H.E.R.L.O.C.K noise remover makes hard-to-hear recordings easier to understand. You give it two files: one that contains only the unwanted background sound, such as a hum or static, and one that contains the voice you want to hear. The program builds a profile of the unwanted sound from the first file, then goes through the recording and reduces that same sound so the voice stands out. It does not use artificial intelligence or machine learning; it relies on traditional digital signal processing.

This program can be used in court cases because it relies on well-established, non-AI signal-processing methods that are transparent, repeatable, and explainable. Its core is spectral noise reduction implemented through the noisereduce Python library, which uses spectral gating, a close relative of classic spectral subtraction. It does not invent, guess, or reconstruct audio; it applies fixed mathematical filters and spectral attenuation to reduce background noise that already exists in the recording. The user supplies a separate noise-only sample as an explicit reference, and the software attenuates only the parts of the spectrum whose energy falls within the statistical range of that known noise profile.

Because the same input files and settings always produce the same output, the process is reproducible and can be independently verified by another expert using the same parameters. The original audio is never altered or overwritten, which preserves the evidentiary chain of custody, and the cleaned file is clearly identifiable as a processed derivative. Every processing step (high-pass filtering, spectral noise reduction, stationary noise modeling, and optional multi-pass attenuation) is a standard forensic audio technique that has existed for decades and can be fully described in testimony without relying on proprietary or opaque "black box" AI behavior. This makes the results defensible under evidentiary standards that require transparency, reliability, and the ability of opposing experts to examine and replicate the method.
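To illustrate the reproducibility and chain-of-custody points, here is a minimal sketch, assuming the evidence is a 16-bit mono WAV file. It hashes the original before and after processing, writes the cleaned audio to a separate derivative file, and compares the hashes of two independent runs. The `process()` function is a hypothetical stand-in for the real cleaning chain, and all file and helper names are made up for illustration; only Python's standard library and NumPy are used.

```python
import hashlib
import os
import tempfile
import wave

import numpy as np


def write_wav(path, samples, rate):
    """Write mono float samples in [-1, 1] as 16-bit PCM."""
    pcm = (np.clip(samples, -1.0, 1.0) * 32767).astype("<i2")
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(rate)
        w.writeframes(pcm.tobytes())


def read_wav(path):
    """Read a 16-bit mono WAV file into floats; the file is only opened for reading."""
    with wave.open(path, "rb") as w:
        rate = w.getframerate()
        pcm = np.frombuffer(w.readframes(w.getnframes()), dtype="<i2")
    return pcm.astype(np.float64) / 32767, rate


def sha256_file(path):
    """SHA-256 of a file's bytes, suitable for recording in case notes."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def process(samples):
    """Hypothetical stand-in for the cleaning chain: any fixed, deterministic operation."""
    return 0.5 * (samples - samples.mean())


def clean_to_derivative(original_path, derivative_path):
    """Read the original, write the processed copy to a NEW file, return the copy's hash."""
    samples, rate = read_wav(original_path)
    write_wav(derivative_path, process(samples), rate)
    return sha256_file(derivative_path)


# Usage: a synthetic "evidence" file in a temporary directory (hypothetical names).
workdir = tempfile.mkdtemp()
original = os.path.join(workdir, "evidence.wav")
write_wav(original, 0.1 * np.random.default_rng(0).standard_normal(8000), 8000)

hash_before = sha256_file(original)
run1 = clean_to_derivative(original, os.path.join(workdir, "cleaned_run1.wav"))
run2 = clean_to_derivative(original, os.path.join(workdir, "cleaned_run2.wav"))
hash_after = sha256_file(original)

print("original unchanged:", hash_before == hash_after)  # original unchanged: True
print("runs identical:", run1 == run2)  # runs identical: True
```

Recording these hashes lets another examiner confirm both that the evidence file was never modified and that re-running with the same settings reproduces the derivative byte for byte.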

The core noise removal is done by the noisereduce library's reduce_noise() function, which performs spectral gating. Internally, the audio is split into short overlapping time windows and transformed from the time domain into the frequency domain with a Short-Time Fourier Transform (STFT). A noise profile is computed from the separate noise-only recording: for each frequency, the mean and standard deviation of its level (in decibels) over time. Each time-frequency bin of the target audio is then compared with that profile, and bins whose energy falls below the noise threshold are treated as noise and attenuated according to a user-controlled reduction factor (prop_decrease, where 1.0 means full attenuation). When the "stationary noise" option is enabled, the algorithm assumes the noise is consistent over time and uses a fixed per-frequency threshold set at the noise mean plus n_std_thresh_stationary standard deviations, rather than tracking a time-varying noise estimate; in noisereduce, the separate noise clip (y_noise) is used only in this stationary mode. After attenuation, the modified frequency data is converted back into audio with an inverse STFT.
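The gating step described above can be sketched with NumPy and SciPy. This is a simplified re-implementation for illustration, not noisereduce's actual code: it keeps the stationary threshold (per-frequency noise mean plus `n_std_thresh` standard deviations, in dB) and the `prop_decrease` scaling, but omits the mask smoothing and other refinements the library applies. The helper names, defaults, and synthetic test signal are assumptions.

```python
import numpy as np
from scipy.signal import istft, stft


def to_db(spec):
    """Magnitude of a complex STFT in decibels (a small floor avoids log of zero)."""
    return 20 * np.log10(np.abs(spec) + 1e-10)


def spectral_gate(signal, noise, rate, n_std_thresh=1.5, prop_decrease=1.0, nperseg=1024):
    """Simplified stationary spectral gate (hypothetical helper, not the noisereduce API)."""
    # Noise profile from the noise-only reference: per-frequency mean and std over time.
    _, _, noise_spec = stft(noise, fs=rate, nperseg=nperseg)
    noise_db = to_db(noise_spec)
    thresh = noise_db.mean(axis=1) + n_std_thresh * noise_db.std(axis=1)

    # STFT of the recording to clean, compared bin by bin with the threshold.
    _, _, sig_spec = stft(signal, fs=rate, nperseg=nperseg)
    is_signal = to_db(sig_spec) > thresh[:, None]

    # Signal bins keep gain 1; noise bins are scaled by (1 - prop_decrease).
    gain = np.where(is_signal, 1.0, 1.0 - prop_decrease)

    # Back to the time domain; trim the padding added by the STFT.
    _, cleaned = istft(sig_spec * gain, fs=rate, nperseg=nperseg)
    return cleaned[: len(signal)]


# Usage: a 440 Hz tone buried in white noise, plus a separate noise-only clip.
rate = 16000
t = np.arange(rate) / rate
rng = np.random.default_rng(0)
tone = 0.5 * np.sin(2 * np.pi * 440 * t)
noisy = tone + 0.05 * rng.standard_normal(rate)
noise_only = 0.05 * rng.standard_normal(rate)

cleaned = spectral_gate(noisy, noise_only, rate)
print("error before:", np.mean((noisy - tone) ** 2))
print("error after: ", np.mean((cleaned - tone) ** 2))
```

With the library itself, the corresponding call would be `noisereduce.reduce_noise(y=noisy, sr=rate, y_noise=noise_only, stationary=True, prop_decrease=1.0, n_std_thresh_stationary=1.5)`.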

Before noise reduction, the program can optionally apply a 5th-order Butterworth high-pass filter, implemented with scipy.signal.butter() and sosfilt(), to remove low-frequency components such as rumble or handling noise while leaving speech content above the cutoff largely unaffected. This filter is deterministic and linear: it does not adapt or learn, and it always applies the same fixed coefficients. The program also supports multi-pass processing, in which the output of one noise-reduction pass is fed back into the same algorithm, compounding the attenuation in difficult recordings. File input/output and resampling are handled by librosa and soundfile, and the interface is built with tkinter; none of these components involve neural networks, training data, inference, or probabilistic generation. Every step is repeatable and produces the same output given the same inputs and settings.
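The optional high-pass stage can be sketched with the same SciPy functions the text names. The 80 Hz cutoff and the helper names `highpass` and `tone_level` are assumptions (the post does not state the cutoff); the synthetic test mixes a 30 Hz "rumble" with a 1 kHz tone standing in for speech-band content.

```python
import numpy as np
from scipy.signal import butter, sosfilt


def highpass(samples, rate, cutoff_hz=80.0, order=5):
    """Butterworth high-pass with fixed coefficients (cutoff is an assumed value)."""
    # Second-order sections are numerically safer than (b, a) coefficients at higher orders.
    sos = butter(order, cutoff_hz, btype="highpass", fs=rate, output="sos")
    return sosfilt(sos, samples)


def tone_level(samples, freq_hz):
    """Magnitude of the FFT bin at freq_hz; assumes 1 s of audio, so bins are 1 Hz apart."""
    return np.abs(np.fft.rfft(samples))[int(freq_hz)]


rate = 16000
t = np.arange(rate) / rate
rumble = 0.5 * np.sin(2 * np.pi * 30 * t)
speech_band = 0.5 * np.sin(2 * np.pi * 1000 * t)
mixed = rumble + speech_band

filtered = highpass(mixed, rate)
print("30 Hz fraction kept:", tone_level(filtered, 30) / tone_level(mixed, 30))
print("1 kHz fraction kept:", tone_level(filtered, 1000) / tone_level(mixed, 1000))
```

Using `output="sos"` with `sosfilt` rather than transfer-function coefficients follows SciPy's general recommendation for filtering, since it avoids numerical problems as the filter order grows.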
