Learn how to loop through scraped data and append it to a CSV file using Python.

---

This video is based on the question https://stackoverflow.com/q/63601890/ asked by the user 'SpingoTakagi' ( https://stackoverflow.com/u/14171470/ ) and on the answer https://stackoverflow.com/a/63603176/ provided by the user 'chitown88' ( https://stackoverflow.com/u/7752296/ ) on the Stack Overflow website. Thanks to these users and the Stack Exchange community for their contributions.

Visit these links for the original content and further details, such as alternate solutions, the latest updates on the topic, comments, and revision history. For example, the original title of the question was: How do I loop and append my code to a CSV file? - Python

Also, content (except music) is licensed under CC BY-SA https://meta.stackexchange.com/help/l...

The original Question post is licensed under the 'CC BY-SA 4.0' ( https://creativecommons.org/licenses/... ) license, and the original Answer post is licensed under the 'CC BY-SA 4.0' ( https://creativecommons.org/licenses/... ) license.

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

How to Loop and Append Data to a CSV File in Python

If you're just starting out with coding, you may run into challenges when gathering data from the web and storing it. A common beginner task is scraping data from a website and saving it in CSV format. A new coder recently asked for help with exactly this: they struggled to print multiple lines of scraped data and append them all to a CSV file. This guide walks through how to do that in Python.

Understanding the Problem

The goal is to scrape data from a specific web page listing FIFA World Cup qualifying matches and save it in a well-structured CSV file. You might get stuck if you can print a single line of information but aren't sure how to loop through the entire dataset.

The Solution Breakdown

Let's break the solution down into clear steps.

Step 1: Import Libraries

We begin by importing the necessary libraries: urllib to download the page, BeautifulSoup to parse its HTML, and pandas to organize the results and write them to CSV.
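
A minimal sketch of these imports, with comments on the role each one plays later. It assumes the third-party packages `beautifulsoup4` and `pandas` are installed (for example with `pip install beautifulsoup4 pandas`); `urllib` ships with Python.

```python
# urllib is part of the standard library; Request lets us set HTTP headers.
from urllib.request import Request, urlopen

# BeautifulSoup (package name: beautifulsoup4) turns raw HTML into a searchable tree.
from bs4 import BeautifulSoup

# pandas holds the scraped rows in a DataFrame and writes them out as CSV.
import pandas as pd
```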

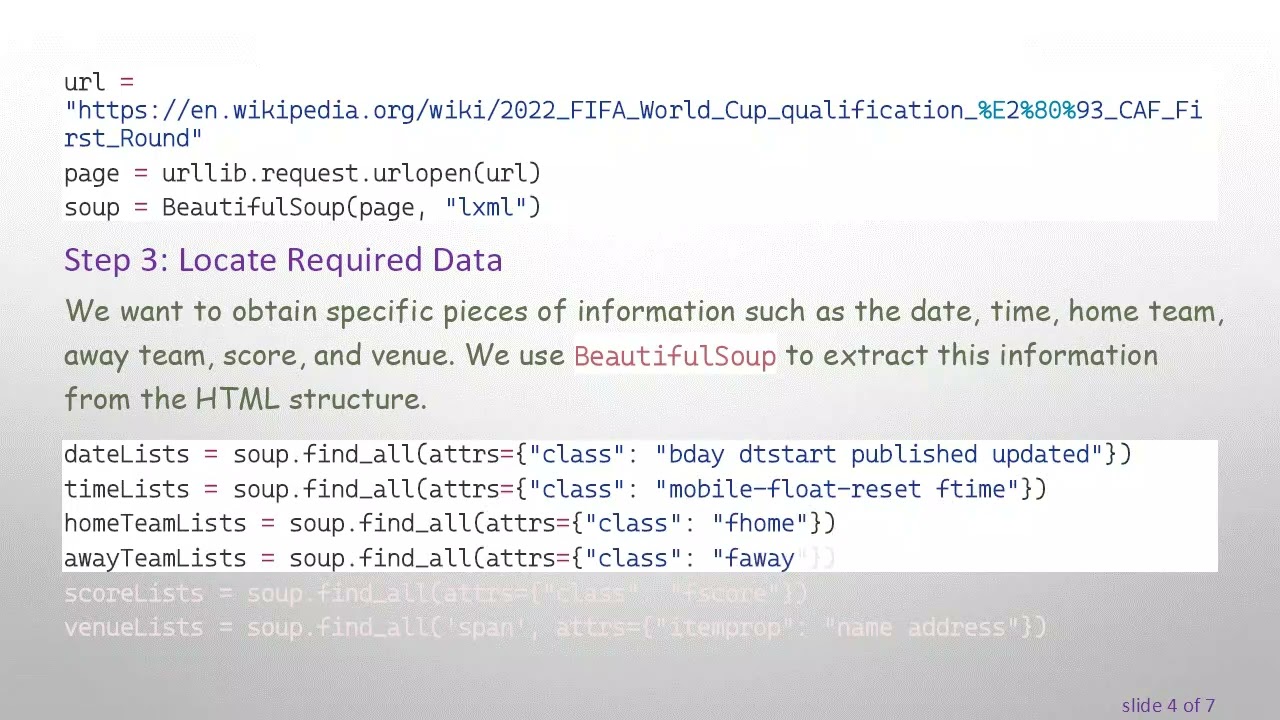

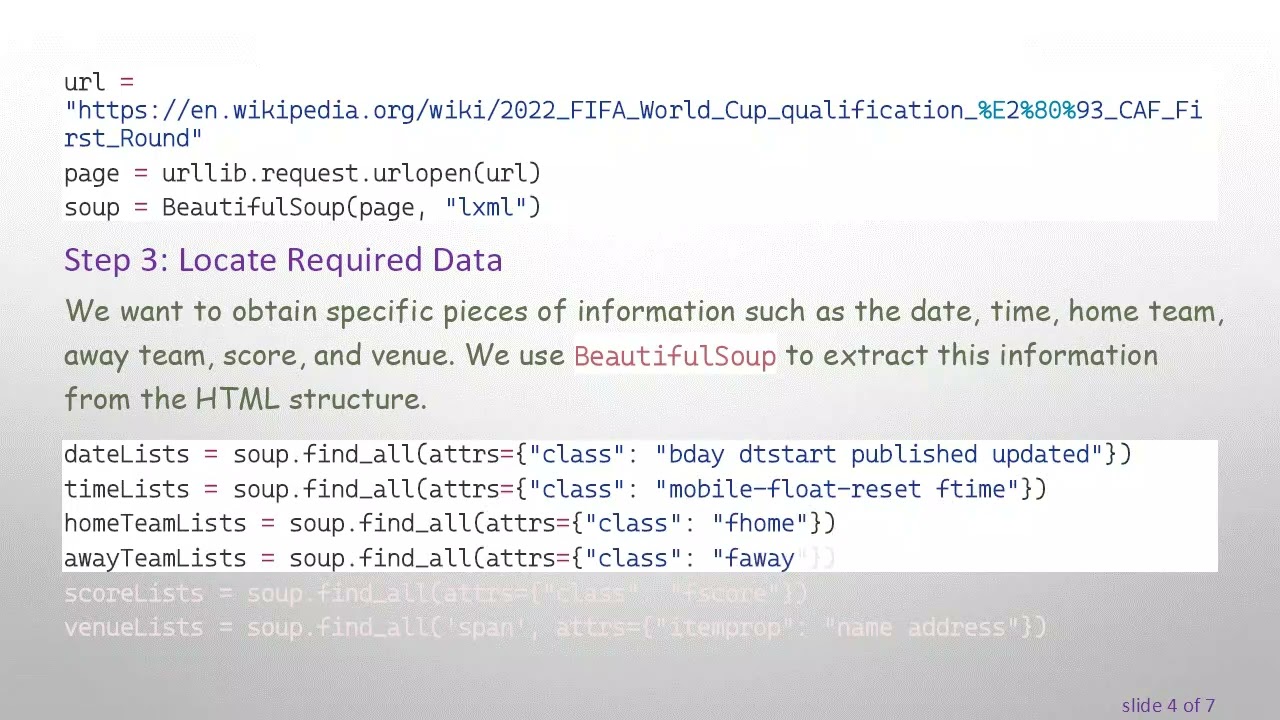

Step 2: Fetch and Parse Web Page

Next, we fetch the web page and parse its HTML content. For this example, we use the URL from the original Stack Overflow question.
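
A minimal sketch of this step follows. The URL is a hypothetical placeholder, since the original page address is not reproduced here, and the browser-like `User-Agent` header is an assumption (some sites refuse urllib's default one). The sketch uses BeautifulSoup's built-in `"html.parser"` backend so no extra parser has to be installed.

```python
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup

# Hypothetical placeholder: substitute the page URL from the original question.
URL = "https://example.com/world-cup-qualifiers"


def fetch_soup(url: str, timeout: float = 10.0) -> BeautifulSoup:
    """Download a page and parse it with Python's built-in html.parser."""
    # Assumption: send a browser-like User-Agent because some sites
    # reject requests that carry urllib's default one.
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=timeout) as response:
        html = response.read()  # raw bytes; BeautifulSoup detects the encoding
    return BeautifulSoup(html, "html.parser")


# Usage (makes a real network request, so it is left commented out):
# soup = fetch_soup(URL)
```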

Step 3: Locate Required Data

We want to extract specific pieces of information for each match: the date, time, home team, away team, score, and venue. BeautifulSoup lets us locate each of these values in the page's HTML structure.
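
The real page's markup is not shown in this text, so the sketch below runs against a small hypothetical HTML table. The `td` tags, the class names (`date`, `home`, and so on), and all values are placeholders to be replaced with whatever the actual page uses. `find_all` collects one tag per match for each field.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for the real page: tag names, class
# names, and values are placeholders, not the site's actual structure.
SAMPLE_HTML = """
<table>
  <tr><td class="date">2000-01-01</td><td class="time">18:00</td><td class="home">Team A</td>
      <td class="score">2 - 1</td><td class="away">Team B</td><td class="venue">Venue X</td></tr>
  <tr><td class="date">2000-01-02</td><td class="time">20:45</td><td class="home">Team C</td>
      <td class="score">0 - 0</td><td class="away">Team D</td><td class="venue">Venue Y</td></tr>
</table>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# find_all returns matching tags in document order, so index i in each
# list should refer to the same match (one cell per field per row).
date_tags = soup.find_all("td", class_="date")
time_tags = soup.find_all("td", class_="time")
home_tags = soup.find_all("td", class_="home")
away_tags = soup.find_all("td", class_="away")
score_tags = soup.find_all("td", class_="score")
venue_tags = soup.find_all("td", class_="venue")

print(len(date_tags), home_tags[0].get_text(strip=True))  # 2 Team A
```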

Step 4: Loop and Store Data

In this step, we create an empty list for each field. A for loop then iterates over the data located in Step 3 and appends each item's text to the matching list, so the same position in every list refers to the same match.
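
Here is a sketch of that loop, again on hypothetical placeholder markup (the class names and values are assumptions, not the real page). It uses `zip` to walk the per-field tag lists in lockstep, which is one straightforward way to implement the loop described above.

```python
from bs4 import BeautifulSoup

# Hypothetical markup; class names and values are placeholders.
SAMPLE_HTML = """
<table>
  <tr><td class="date">2000-01-01</td><td class="time">18:00</td><td class="home">Team A</td>
      <td class="score">2 - 1</td><td class="away">Team B</td><td class="venue">Venue X</td></tr>
  <tr><td class="date">2000-01-02</td><td class="time">20:45</td><td class="home">Team C</td>
      <td class="score">0 - 0</td><td class="away">Team D</td><td class="venue">Venue Y</td></tr>
</table>
"""
soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Step 3: one tag list per field, one entry per match.
date_tags = soup.find_all("td", class_="date")
time_tags = soup.find_all("td", class_="time")
home_tags = soup.find_all("td", class_="home")
away_tags = soup.find_all("td", class_="away")
score_tags = soup.find_all("td", class_="score")
venue_tags = soup.find_all("td", class_="venue")

# One empty list per column of the eventual CSV.
dates, times, homes, aways, scores, venues = [], [], [], [], [], []

# zip yields one match per iteration and stops at the shortest
# list if the counts ever differ.
for d, t, h, a, s, v in zip(date_tags, time_tags, home_tags, away_tags, score_tags, venue_tags):
    dates.append(d.get_text(strip=True))
    times.append(t.get_text(strip=True))
    homes.append(h.get_text(strip=True))
    aways.append(a.get_text(strip=True))
    scores.append(s.get_text(strip=True))
    venues.append(v.get_text(strip=True))

print(homes)  # ['Team A', 'Team C']
```

A design caveat: `zip` relies on every row having all six cells. If some matches lack a field (for example, no score yet for an unplayed fixture), the lists can fall out of alignment; looping over each row and reading the cells inside it keeps every field tied to its own match.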

Step 5: Create a DataFrame

Once all the information is collected, we can build a pandas DataFrame from the lists. The DataFrame makes the data easy to inspect, manipulate, and save in CSV format.
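
As a sketch, the lists can be passed to `pd.DataFrame` as a dictionary that maps column names to lists. The column names and the placeholder values below are illustrative assumptions, shaped like the per-field lists built in Step 4.

```python
import pandas as pd

# Placeholder column lists (hypothetical values).
dates = ["2000-01-01", "2000-01-02"]
times = ["18:00", "20:45"]
homes = ["Team A", "Team C"]
aways = ["Team B", "Team D"]
scores = ["2 - 1", "0 - 0"]
venues = ["Venue X", "Venue Y"]

# Each dict key becomes a column header. All lists must have the same
# length; otherwise pandas raises a ValueError.
df = pd.DataFrame({
    "Date": dates,
    "Time": times,
    "Home Team": homes,
    "Away Team": aways,
    "Score": scores,
    "Venue": venues,
})

print(df.shape)  # (2, 6)
```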

Step 6: Export to CSV

Finally, we export the DataFrame to a CSV file. pandas makes this straightforward with its built-in DataFrame.to_csv method.
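
Writing a fresh file takes one line, for example `df.to_csv("fixtures.csv", index=False)` (the filename is hypothetical). Because the original question is about appending, the sketch below wraps the append variant in a hypothetical helper, `append_to_csv`, which passes `mode="a"` and writes the header only when the file does not exist yet.

```python
import os
import tempfile

import pandas as pd


def append_to_csv(df: pd.DataFrame, path: str) -> None:
    """Write df to path, adding rows to the end if the file already exists."""
    file_exists = os.path.exists(path)
    # mode="a" appends instead of overwriting; the header row is written
    # only for a new file so it is not repeated on every run.
    # index=False leaves out pandas' 0, 1, 2, ... row labels.
    df.to_csv(path, mode="a", header=not file_exists, index=False)


# Demo with placeholder rows, written to a temporary directory.
df = pd.DataFrame({"Home Team": ["Team A"], "Away Team": ["Team B"], "Score": ["2 - 1"]})
path = os.path.join(tempfile.mkdtemp(), "fixtures.csv")
append_to_csv(df, path)  # creates the file with a header row
append_to_csv(df, path)  # appends one more data row, no second header
print(len(pd.read_csv(path)))  # 2
```

Appending assumes the columns come out in the same order on every run; `to_csv` does not compare them against the header already in the file.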

Conclusion

To summarize, scraping data from the web with Python and saving it to a CSV file involves fetching the page with urllib, parsing it with BeautifulSoup, looping over the results to collect each field in a list, and finally building a DataFrame with pandas and exporting it to CSV. Breaking the task into these steps keeps each part simple and gives you a reusable pattern for data manipulation in Python.

Feel free to ask more questions regarding this topic or any other coding challenges you might face. Happy coding!
