Authors:

Narsimlu Kemsaram, James Hardwick, Jincheng Wang, Bonot Gautam, Ceylan Besevli, Giorgos Christopoulos, Sourabh Dogra, Lei Gao, Akin Delibasi, Diego Martinez Plasencia, Orestis Georgiou, Marianna Obrist, Ryuji Hirayama, Sriram Subramanian

Paper: https://doi.org/10.3389/frobt.2025.15...

Abstract:

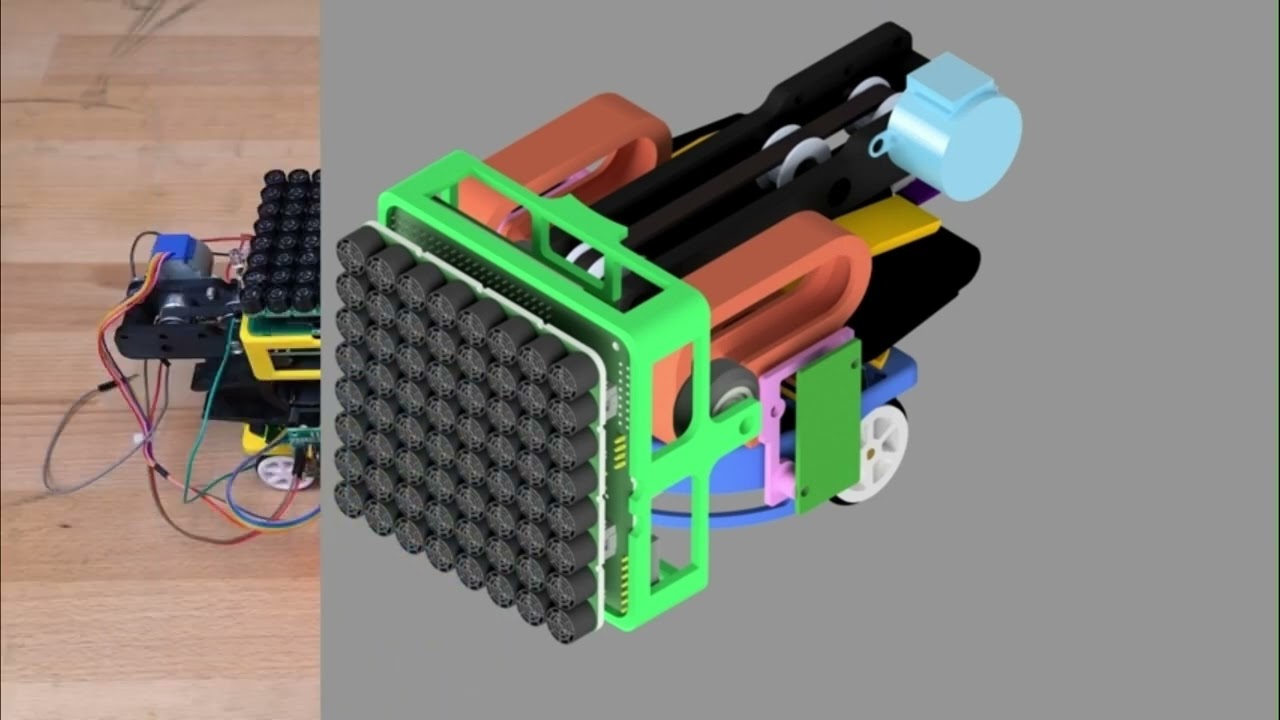

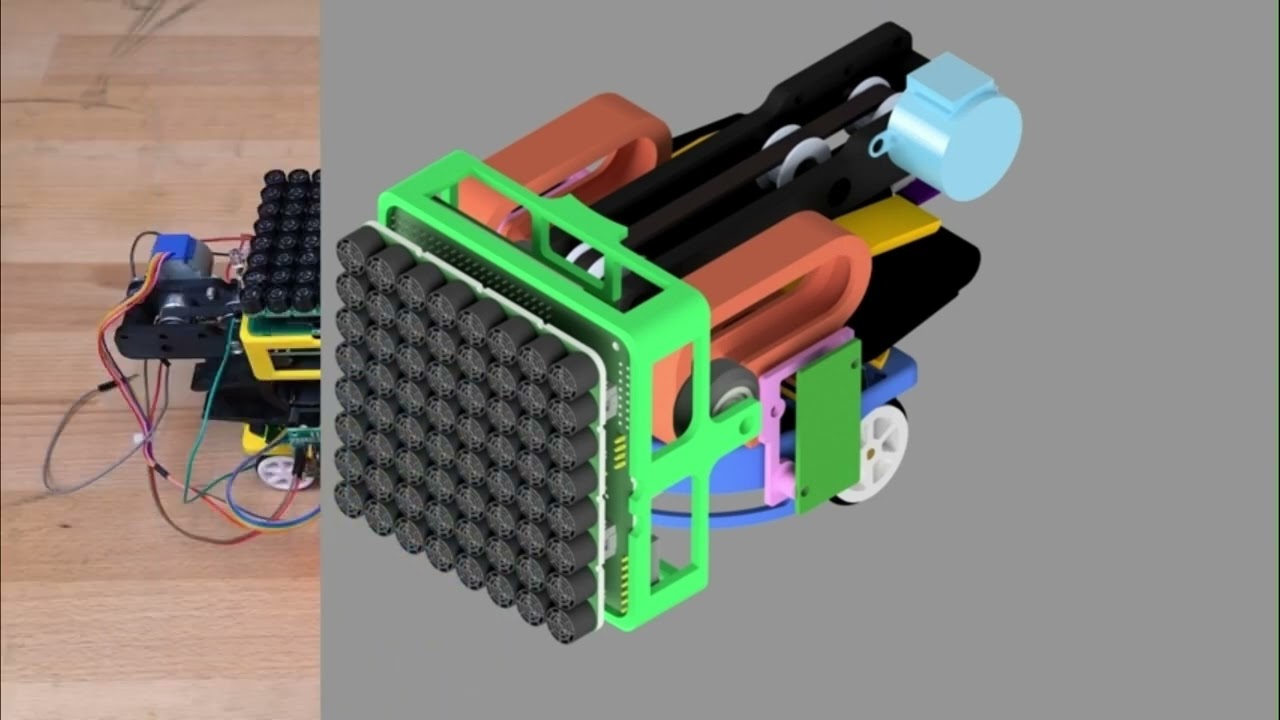

Acoustophoresis has enabled novel interaction capabilities, such as levitation, volumetric displays, mid-air haptic feedback, and directional sound generation, opening new forms of multimodal interaction. However, its traditional implementation as a single static unit limits its dynamic range and application versatility. This paper introduces "AcoustoBots", a novel convergence of acoustophoresis with a movable and reconfigurable phased array of transducers for enhanced application versatility. We mount a phased array of transducers on each robot in a swarm to harness the benefits of multiple mobile acoustophoretic units. This offers a more flexible and interactive platform that enables a swarm of acoustophoretic multimodal interactions. Our AcoustoBots design includes a hinge actuation system that controls the orientation of the mounted phased array of transducers, giving high flexibility in a swarm of acoustophoretic multimodal interactions. In addition, we designed a BeadDispenserBot that delivers particles to trapping locations, automating the acoustic levitation interaction. These attributes allow AcoustoBots to work independently toward a common goal and to switch between modalities, enabling novel augmentations (e.g., a swarm of haptics, audio, and levitation) and bilateral interactions with users over an expanded interaction area. We detail our design considerations, challenges, and methodological approach for extending centralized acoustophoretic control to distributed settings. This work demonstrates a scalable acoustic control framework with two mobile robots, laying the groundwork for future deployment in larger robotic swarms. Finally, we characterize the performance of our AcoustoBots and explore the interactive scenarios they can enable.
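For readers new to phased arrays, the sketch below illustrates the general principle behind steering acoustic energy with a transducer array: each transducer is driven with a phase that cancels its propagation delay to a target point, so all waves arrive there in phase. This is a generic textbook focusing rule, not the AcoustoBots control method. The frequency (40 kHz), pitch (10.5 mm), 16 x 16 grid, and the `tilt_rad` parameter (a stand-in for a hinge angle) are all assumptions chosen for illustration, as are the function names `transducer_positions` and `focus_phases`.

```python
import numpy as np

# Illustrative assumptions only; none of these values come from the paper.
SPEED_OF_SOUND = 343.0   # m/s, air at roughly 20 degrees C
FREQUENCY = 40_000.0     # Hz, a common ultrasonic transducer frequency
PITCH = 0.0105           # m, hypothetical centre-to-centre transducer spacing
GRID = 16                # hypothetical 16 x 16 array


def transducer_positions(grid=GRID, pitch=PITCH, tilt_rad=0.0):
    """Return (N, 3) positions of a flat square array centred at the origin,
    rotated about the x-axis by tilt_rad (a hypothetical hinge angle)."""
    coords = (np.arange(grid) - (grid - 1) / 2) * pitch
    xs, ys = np.meshgrid(coords, coords)
    pts = np.stack([xs.ravel(), ys.ravel(), np.zeros(grid * grid)], axis=1)
    c, s = np.cos(tilt_rad), np.sin(tilt_rad)
    rot_x = np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])
    return pts @ rot_x.T


def focus_phases(positions, focal_point, freq=FREQUENCY, c=SPEED_OF_SOUND):
    """Phase (rad) per transducer so every wave arrives at focal_point in phase:
    phi_i = -k * |r_i - p|, wrapped into one period [0, 2*pi]."""
    k = 2 * np.pi * freq / c  # wavenumber
    dists = np.linalg.norm(positions - np.asarray(focal_point), axis=1)
    return np.mod(-k * dists, 2 * np.pi)


# Usage: focus 10 cm in front of an array tilted by 30 degrees.
pos = transducer_positions(tilt_rad=np.deg2rad(30))
phases = focus_phases(pos, focal_point=(0.0, 0.0, 0.10))
```

Because the phases depend only on transducer positions, re-orienting the array (here modelled as a rotation) simply changes `positions` and the same rule recomputes the drive phases. Levitation traps typically need an additional phase signature on top of focusing, which this sketch does not cover.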

Acknowledgements:

This work was supported by the EPSRC Prosperity Partnership program "Swarm Spatial Sound Modulators" (EP/V037846/1), by the Royal Academy of Engineering through their Chairs in Emerging Technology Program (CIET 17/18), by the EU H2020 research and innovation project Touchless (101017746), and by the UKRI Frontier Research Guarantee Grant (EP/X019519/1). The authors thank Ana Marques for her support in creating the images and videos, and James Hardwick for his voice-over for the accompanying video.
