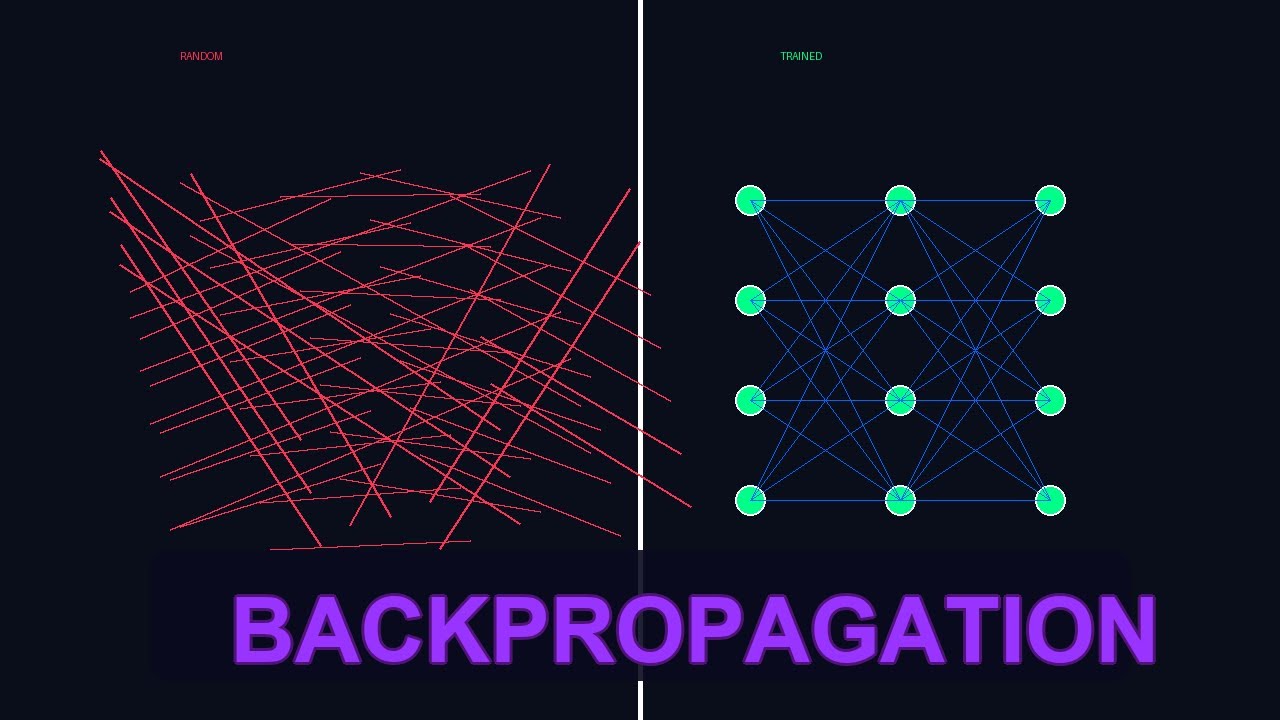

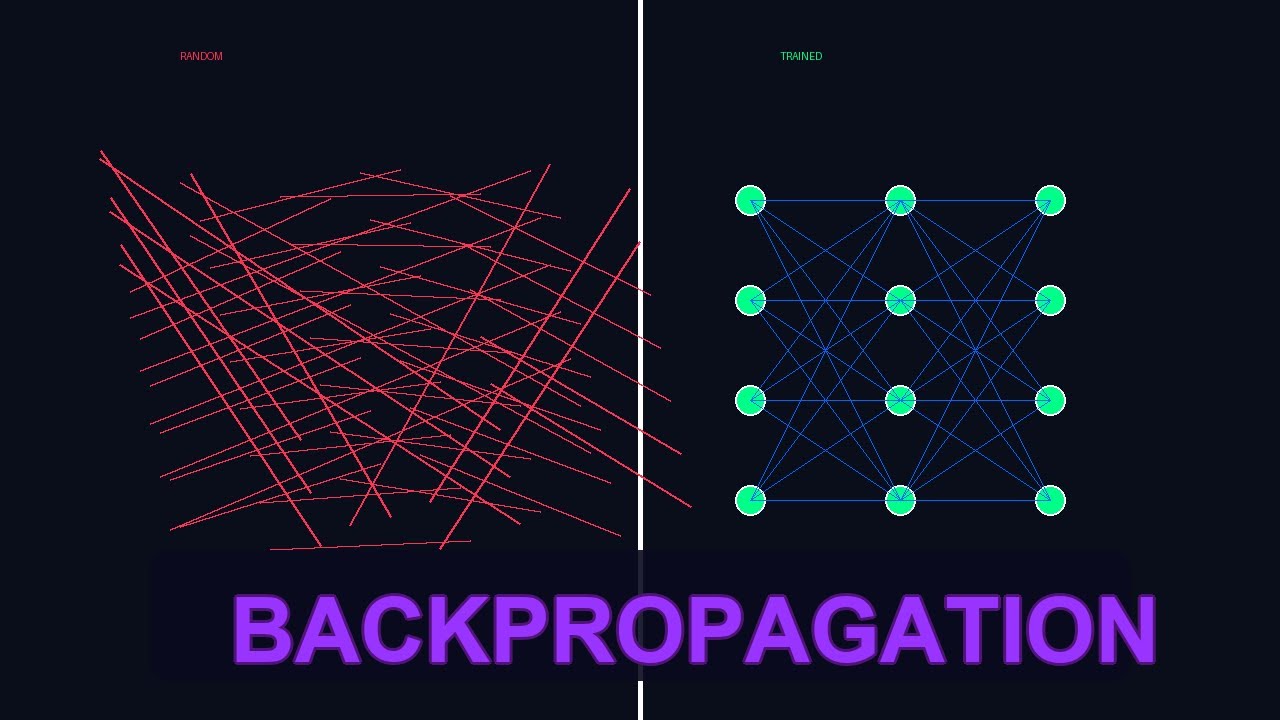

The 1986 Algorithm That Powers ALL Modern AI (Backpropagation Explained)

Every AI system you use - ChatGPT, DALL-E, self-driving cars, medical diagnosis - is trained with ONE algorithm popularized in 1986: backpropagation. Without it, training deep neural networks would be impractical. With it, we get the AI revolution.

In this 10-minute deep dive, I'll show you EXACTLY how backpropagation works, from absolute zero to complete understanding. No prerequisites needed - just clear explanations with professional visuals.

⏱️ TIMESTAMPS:

00:00 - The Secret Behind All AI

01:07 - The Problem: Why Networks Need to Learn

02:27 - Forward Pass: How Networks Make Predictions

04:21 - Loss Function: Measuring Error

06:17 - BACKPROPAGATION: The Chain Rule in Action

08:46 - Gradient Descent: Actually Learning

10:00 - Real-World Example: Cats vs Dogs

10:22 - Conclusion & Recap

🎓 WHAT YOU'LL LEARN:

✅ What backpropagation is and why it matters

✅ How the forward pass generates predictions

✅ What loss functions measure and why

✅ How the chain rule enables gradient calculation

✅ How gradient descent optimizes neural networks

✅ Real training example with 25 million parameters

✅ Why this 1986 algorithm still powers 2026 AI

🔑 KEY CONCEPTS EXPLAINED:

• Neural network architecture

• Weights and biases

• Activation functions (ReLU, Sigmoid)

• Loss functions (Cross-Entropy, MSE)

• Gradients and the chain rule

• Training loops and convergence
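
To make these concepts concrete, here is a minimal sketch in plain Python (not the code from the video). It assumes the simplest possible "network": a single sigmoid neuron with one weight and one bias, trained with a squared-error loss on one made-up data point. All names (`forward`, `backward`, `train`) and numbers are illustrative assumptions.

```python
import math

def sigmoid(z):
    """Sigmoid activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(w, b, x):
    """Forward pass: weighted input plus bias, then the activation."""
    z = w * x + b
    return z, sigmoid(z)

def mse_loss(pred, target):
    """Squared error for a single example."""
    return (pred - target) ** 2

def backward(w, b, x, target):
    """Backpropagation via the chain rule:
    dL/dw = dL/dpred * dpred/dz * dz/dw (and likewise for b)."""
    _, pred = forward(w, b, x)
    dL_dpred = 2.0 * (pred - target)   # derivative of the squared error
    dpred_dz = pred * (1.0 - pred)     # derivative of the sigmoid
    dL_dw = dL_dpred * dpred_dz * x    # dz/dw = x
    dL_db = dL_dpred * dpred_dz        # dz/db = 1
    return dL_dw, dL_db

def train(w, b, x, target, lr=0.5, steps=200):
    """Gradient descent: repeatedly step against the gradient."""
    for _ in range(steps):
        dw, db = backward(w, b, x, target)
        w -= lr * dw
        b -= lr * db
    return w, b

# Illustrative values only (assumed, not taken from the video).
w0, b0, x, target = 0.1, 0.0, 1.5, 0.8
w1, b1 = train(w0, b0, x, target)
print("loss before:", mse_loss(forward(w0, b0, x)[1], target))
print("loss after: ", mse_loss(forward(w1, b1, x)[1], target))
```

Real networks apply the same three steps (forward pass, chain-rule gradients, weight update) across many layers and many examples; frameworks such as PyTorch and TensorFlow compute the gradients automatically.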

📊 REAL NUMBERS:

• GPT-3: 175 BILLION parameters

• ResNet-50: 25 million parameters

• MNIST accuracy: 98% after training

• Training iterations: millions

🎯 WHO IS THIS FOR:

Perfect for students, developers, data scientists, and anyone curious about how AI actually works. Whether you're studying machine learning, building neural networks, or just fascinated by AI, this video breaks down the math into clear, visual explanations.

🔬 TECHNICAL DEPTH:

This is a DEEP DIVE - not surface-level. We cover:

• Mathematical foundations (calculus, chain rule)

• Network architectures (input, hidden, output layers)

• Training pipelines (forward pass, backprop, update)

• Optimization techniques (learning rates, momentum)

• Real-world applications (vision, NLP, self-driving)

📚 RESOURCES & FURTHER LEARNING:

• Original Backprop Paper (1986): Rumelhart, Hinton, Williams, "Learning representations by back-propagating errors" (Nature)

• Deep Learning Book: Ian Goodfellow, Yoshua Bengio, Aaron Courville

• Neural Networks Course: Andrew Ng (Coursera)

• PyTorch/TensorFlow: Implement your own networks

🚀 APPLICATIONS POWERED BY BACKPROPAGATION:

• ChatGPT / GPT-4 (language models)

• DALL-E / Stable Diffusion (image generation)

• Tesla Autopilot (self-driving cars)

• AlphaGo / AlphaFold (game AI, protein structure prediction)

• Medical diagnosis systems (radiology, pathology)

• Voice assistants (Siri, Alexa, Google Assistant)

💡 WHY THIS MATTERS:

Understanding backpropagation is THE key to understanding modern AI. It's the difference between using AI as a black box and actually knowing how it works. Whether you're debugging neural networks, optimizing training pipelines, or just trying to understand the AI revolution, this video gives you the foundational knowledge.

🔔 SUBSCRIBE for more AI deep dives:

Next videos: Transformers explained, CNNs from scratch, RNNs & LSTMs

💬 COMMENT YOUR QUESTIONS:

What AI topic should I explain next? Drop suggestions below!

🏷️ TAGS:

#backpropagation #neuralnetworks #deeplearning #machinelearning #ai #gradientdescent #artificialintelligence #datascience #python #tensorflow #pytorch #computerscience #stem #education #tutorial

---

📧 CONTACT & COLLABORATION:

For sponsorships, collaborations, or business inquiries: [email protected]

🙏 CREDITS:

• Animation: Manim Community Edition

• Research: Stanford CS231n, MIT 6.S191

---

⚖️ DISCLAIMER:

This video is for educational purposes. All concepts explained are based on published research and widely-accepted machine learning principles.

---

© 2026 @DuniyaDrift All rights reserved.
