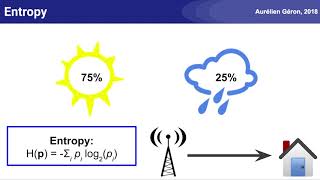

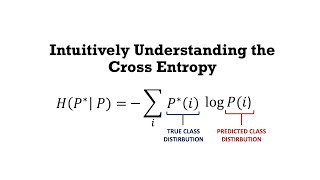

Entropy | Cross Entropy | KL Divergence | Quick Explained

![[Deep Learning] Lecture 4. Information Theory Basics in 15 Minutes! (Entropy, Cross-Entropy, KL-divergence, Mutual information)](https://i.ytimg.com/vi/z1k8HVU4Mxc/mqdefault.jpg)
