Demonstrate-Search-Predict (DSP) Model by Stanford University

DSP can express high-level programs that bootstrap pipeline-aware demonstrations, search for relevant passages, and generate grounded predictions. It systematically breaks problems down into small transformations that the language model (LM) and the retrieval model (RM) can handle more reliably.
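To make the three transformations concrete, here is a minimal Python sketch of a DSP-style program. It is not the DSP library's API: `toy_lm` is a hypothetical stand-in for a language model, `search` is a word-overlap ranker over a tiny hypothetical in-memory corpus standing in for a retrieval model, and `demonstrate` simply picks the most similar labeled example instead of bootstrapping one.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Example:
    question: str
    answer: str = ""
    context: list[str] = field(default_factory=list)


# Hypothetical in-memory corpus standing in for a real retrieval index.
CORPUS = [
    "Stanford University is located in Stanford, California.",
    "The Eiffel Tower is in Paris.",
    "Paris is the capital of France.",
]

# Hypothetical labeled example used as a few-shot demonstration.
TRAIN = [
    Example(
        "Where is Stanford University located?",
        "California",
        ["Stanford University is located in Stanford, California."],
    )
]


def tokens(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def toy_lm(prompt: str) -> str:
    """LM stand-in (hypothetical): answers with the last word of the most
    recent 'Context:' line. A real pipeline would call a language model here."""
    context_lines = [ln for ln in prompt.splitlines() if ln.startswith("Context:")]
    words = re.findall(r"\w+", context_lines[-1]) if context_lines else []
    return words[-1] if words else "unknown"


def demonstrate(x: Example, k: int = 1) -> list[Example]:
    """Demonstrate: choose the k training examples most similar to the question."""
    return sorted(TRAIN, key=lambda d: len(tokens(d.question) & tokens(x.question)),
                  reverse=True)[:k]


def search(query: str, k: int = 1) -> list[str]:
    """Search (RM stand-in): rank passages by word overlap with the query."""
    q = tokens(query)
    return sorted(CORPUS, key=lambda p: len(q & tokens(p)), reverse=True)[:k]


def predict(x: Example, demos: list[Example]) -> Example:
    """Predict: build a prompt from demos plus retrieved context, then query the LM."""
    lines: list[str] = []
    for d in demos:
        lines += [f"Context: {' '.join(d.context)}", f"Question: {d.question}",
                  f"Answer: {d.answer}", ""]
    lines += [f"Context: {' '.join(x.context)}", f"Question: {x.question}", "Answer:"]
    return Example(x.question, toy_lm("\n".join(lines)), x.context)


def dsp_program(question: str) -> Example:
    x = Example(question)
    demos = demonstrate(x)               # Demonstrate
    x.context = search(x.question, k=1)  # Search
    return predict(x, demos)             # Predict
```

With this toy setup, `dsp_program("Where is the Eiffel Tower?").answer` evaluates to `"Paris"`. Keeping each stage a small function over a shared `Example` record means any one of them can be swapped for a real LM or RM call without touching the others.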

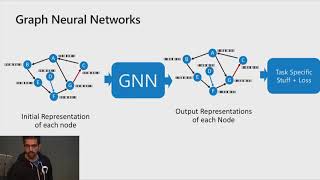

The content discussed here centers on the design of a complex large language model (LLM) system that integrates several data models through a self-programmable, intelligent pipeline. First, the LLM decomposes a complex task into simpler subtasks, each represented by a node in a graph. Each node specializes in one function, such as information retrieval, response generation, or response optimization. The distinctive step is turning these nodes into intelligent, task-specific modules within the graph, which enables sophisticated data processing and task execution. The edges of the graph represent data flow between modules; in this design they are also self-programmable and intelligent, which is meant to make the whole system more efficient and adaptable.
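A minimal sketch of this decomposition, assuming each subtask is a plain Python function and each edge simply passes a node's output to the nodes that depend on it. The node names (`retrieve_wiki`, `retrieve_news`, `generate`, `refine`) and their string outputs are hypothetical placeholders for LM and RM calls, and the edges here are fixed rather than self-programming.

```python
from graphlib import TopologicalSorter  # Python 3.9+


# Hypothetical node functions: each takes the task and its upstream outputs.
def retrieve_wiki(task: str, upstream: dict[str, str]) -> str:
    return f"wiki passages on {task}"


def retrieve_news(task: str, upstream: dict[str, str]) -> str:
    return f"news passages on {task}"


def generate(task: str, upstream: dict[str, str]) -> str:
    # Fan-in: combine every upstream retrieval in a deterministic order.
    evidence = "; ".join(upstream[name] for name in sorted(upstream))
    return f"draft answer to {task} from [{evidence}]"


def refine(task: str, upstream: dict[str, str]) -> str:
    # Response-optimization stand-in: a real node might ask the LM to critique and rewrite.
    return upstream["generate"].replace("draft", "refined", 1)


NODES = {"retrieve_wiki": retrieve_wiki, "retrieve_news": retrieve_news,
         "generate": generate, "refine": refine}

# Edges as "node -> nodes whose output it consumes" (data flowing into the node).
DEPS = {"retrieve_wiki": set(), "retrieve_news": set(),
        "generate": {"retrieve_wiki", "retrieve_news"}, "refine": {"generate"}}


def run_graph(task, nodes, deps):
    """Execute nodes in dependency order, passing each one its predecessors' outputs."""
    outputs: dict[str, str] = {}
    for name in TopologicalSorter(deps).static_order():
        upstream = {p: outputs[p] for p in deps.get(name, set())}
        outputs[name] = nodes[name](task, upstream)
    return outputs
```

Running `run_graph("DSP", NODES, DEPS)` executes both retrievers before `generate`, because `graphlib.TopologicalSorter` orders every node after the nodes whose output it consumes.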

The text then highlights the evolution from a LangChain approach to a LangGraph approach, in which creating a graph means specifying the state, the agent nodes, and the edge logic. This interface draws inspiration from NetworkX, which keeps constructing and running graphs simple. The system extends to self-configuring, self-learning, and self-optimizing pipelines, using graph theory as a more rigorous mathematical representation of the connections between modules such as language models and retrieval models. GraphSAGE and GraphBERT are mentioned as neural approaches to learning on such graphs, alongside PyG (PyTorch Geometric) for graph convolutions and node classification.
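The state / nodes / edge-logic pattern can be sketched without any framework. `MiniGraph` below is a hypothetical, minimal class written for illustration; it is not LangGraph's `StateGraph`, and its method names are assumptions. A node maps the shared state to an updated state, and a conditional edge is a function that reads the state and names the next node, which is what allows the graph to loop (here, regenerating a draft until an assumed budget of three attempts is used up).

```python
from typing import Callable, Dict, TypedDict

END = "__end__"  # sentinel marking the end of a run


class State(TypedDict):
    question: str
    context: str
    draft: str
    attempts: int


def retrieve(state: State) -> State:
    return {**state, "context": f"passages for: {state['question']}"}


def generate(state: State) -> State:
    n = state["attempts"] + 1
    return {**state, "draft": f"answer v{n}", "attempts": n}


def route_after_generate(state: State) -> str:
    # Edge logic: loop back to "generate" until a (hypothetical) attempt budget is used.
    return END if state["attempts"] >= 3 else "generate"


class MiniGraph:
    """Hypothetical minimal state graph; NOT the LangGraph API."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.routers: Dict[str, Callable[[State], str]] = {}
        self.entry = END

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        self.routers[src] = lambda _state: dst  # unconditional edge

    def add_conditional_edge(self, src: str, router: Callable[[State], str]) -> None:
        self.routers[src] = router  # the router picks the next node from the state

    def set_entry(self, name: str) -> None:
        self.entry = name

    def run(self, state: State, max_steps: int = 25) -> State:
        current, steps = self.entry, 0
        while current != END:
            if steps >= max_steps:
                raise RuntimeError("step limit reached; check the edge logic for a cycle")
            state = self.nodes[current](state)
            current = self.routers[current](state)
            steps += 1
        return state


def build_graph() -> MiniGraph:
    g = MiniGraph()
    g.add_node("retrieve", retrieve)
    g.add_node("generate", generate)
    g.set_entry("retrieve")
    g.add_edge("retrieve", "generate")
    g.add_conditional_edge("generate", route_after_generate)
    return g
```

Starting from `{"question": "What is DSP?", "context": "", "draft": "", "attempts": 0}`, `build_graph().run(...)` visits `retrieve` once and `generate` three times, ending with `draft == "answer v3"`. The `max_steps` guard matters once edges are allowed to form cycles.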

A year ago, Stanford University pioneered the combination of frozen language models with retrieval models for complex NLP tasks through in-context learning, without fine-tuning either model. This approach, known as retrieval-augmented in-context learning (RAICL), uses the language model's generative capabilities to create additional synthetic training data on the fly, within the required knowledge domain, so that the system can keep learning and adapting.

The DSP (Demonstrate, Search, Predict) methodology is central to this process. In the demonstrate stage, the language model creates examples that guide its handling of the task; in the search stage, the system retrieves relevant data; and in the predict stage, it synthesizes that data into a coherent response. The architecture supports multi-hop search and reasoning, which improves its handling of complex queries and lets it improve over time through self-optimization. This methodology from Stanford marks a major leap in AI, turning rigid template-based systems into dynamic, self-improving, graph-based architectures.
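The demonstrate stage and multi-hop search can be illustrated together with a toy sketch, assuming a word-overlap retriever and a hypothetical `toy_lm` that simply returns a passage's last word. `bootstrap_demos` runs a two-hop pipeline over labeled questions and keeps only the traces that reach the gold answer; the surviving intermediate queries and passages are the kind of pipeline-aware annotations the demonstrate stage is meant to produce. This is a simplified illustration, not the DSP library's implementation.

```python
import re

# Hypothetical toy corpus; a real system would query a retrieval model (RM).
CORPUS = [
    "The Eiffel Tower is in Paris.",
    "Paris is the capital of France.",
    "Stanford University is in California.",
    "California is a state of the United States.",
]

# Hypothetical labeled training questions: final answers only, no intermediate hops.
TRAIN = [
    ("Which country is the Eiffel Tower in?", "France"),
    ("Which country is Stanford University in?", "United States"),
]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def search(query: str, exclude: list[str]) -> str:
    """Return the best word-overlap passage not retrieved in an earlier hop."""
    candidates = [p for p in CORPUS if p not in exclude]
    return max(candidates, key=lambda p: len(tokens(query) & tokens(p)))


def toy_lm(passage: str) -> str:
    """LM stand-in (hypothetical): returns the passage's last word, used both
    as the next hop's query and as the final answer."""
    return re.findall(r"\w+", passage)[-1]


def multihop(question: str, hops: int = 2) -> dict:
    """Search, read, write a new query; repeat for `hops` rounds, then predict."""
    query, seen, trace = question, [], []
    for _ in range(hops):
        passage = search(query, exclude=seen)
        seen.append(passage)
        trace.append({"query": query, "passage": passage})
        query = toy_lm(passage)  # the LM writes the next hop's query
    return {"question": question, "hops": trace, "answer": toy_lm(seen[-1])}


def bootstrap_demos(train: list[tuple[str, str]]) -> list[dict]:
    """Demonstrate stage (simplified): run the pipeline on labeled questions and keep
    only traces whose final answer matches the label. Their intermediate queries and
    passages become synthetic annotations the training data never contained."""
    demos = []
    for question, gold in train:
        trace = multihop(question)
        if trace["answer"].lower() == gold.lower():
            demos.append(trace)
    return demos
```

On the two hypothetical training questions, only the Eiffel Tower trace survives: the Stanford question retrieves the right passages, but the one-word toy LM answers `"States"` instead of `"United States"`, so that trace is discarded rather than used as a demonstration.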

