Understanding Logistic Regression: Differences from Linear Regression and Decision Tree Explained📊

[00:02] Logistic regression predicts a binary outcome: the dependent variable is categorical.

It models the probability of success or failure, akin to a coin toss.

The dependent variable is categorical, resulting in outputs of 0 or 1.

[00:35] The sigmoid function models probabilities in logistic regression.

The sigmoid function outputs values between 0 and 1, representing predicted probabilities.

As the input (z) approaches infinity, the predicted value approaches 1, and as it approaches negative infinity, it approaches 0.
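The limiting behavior described above can be checked numerically. This is a minimal sketch; the function name `sigmoid` and the two-branch form (used only to avoid overflow for large negative inputs) are choices made here, not something taken from the video.

```python
import math

def sigmoid(z: float) -> float:
    """Logistic function 1 / (1 + e^(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, use the equivalent form e^z / (1 + e^z).
    ez = math.exp(z)
    return ez / (1.0 + ez)

# Values move toward 0 on the left, sit at 0.5 for z = 0, and move toward 1 on the right.
for z in (-10, -2, 0, 2, 10):
    print(f"z={z:>4}  sigmoid(z)={sigmoid(z):.5f}")
```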

[01:09] Logistic regression estimates probabilities for binary outcomes.

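The value of z (the linear combination of inputs that is fed to the sigmoid) indicates classification confidence: z = 0 gives a 50% probability, and larger positive or negative values push the probability toward 1 or 0.

Unlike linear regression, logistic regression outputs probabilities bounded between 0 and 1, which makes it suitable for binary classification.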

[01:37] Logistic regression is for binary outcomes, unlike linear regression for continuous values.

Logistic regression predicts binary dependent variables, while linear regression predicts continuous ones.

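Linear regression assumes a linear relationship between the independent variables and the dependent variable itself, whereas logistic regression assumes linearity only between the independent variables and the log-odds of the outcome.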

[02:06] Logistic regression predicts tumor type from tumor size; its independent variables should not be strongly correlated.

In logistic regression, the independent variables should not be highly correlated with one another, because strong correlation makes the estimated coefficients unstable.

The model passes tumor size through a sigmoid function to obtain a probability, predicting malignancy if the output exceeds 0.5.
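A minimal sketch of that decision rule follows. The weight and bias are hypothetical values picked for illustration (they place the decision boundary at 4 cm) and are not fitted to any real data; the function names are also assumptions.

```python
import math

# Hypothetical parameters, chosen for illustration only (not fitted to data).
WEIGHT = 1.5   # change in z per cm of tumor size
BIAS = -6.0    # puts the decision boundary at -BIAS / WEIGHT = 4.0 cm

def malignancy_probability(size_cm: float) -> float:
    """Sigmoid of the linear score z = WEIGHT * size + BIAS."""
    z = WEIGHT * size_cm + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def classify(size_cm: float, threshold: float = 0.5) -> int:
    """Return 1 (malignant) if the probability exceeds the threshold, else 0."""
    return 1 if malignancy_probability(size_cm) > threshold else 0

for size in (2.0, 4.0, 6.0):
    print(size, round(malignancy_probability(size), 3), classify(size))
```

A size exactly on the boundary gets a probability of 0.5 and is labeled 0 here, because the rule requires the output to exceed the threshold.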

[02:37] Linear regression struggles to classify tumors by size.

Classification with linear regression depends on thresholding the fitted line, and the point where the line crosses the threshold shifts when the range of tumor sizes changes.

Having to readjust the threshold whenever the data changes makes it hard to get reliable classifications from linear regression.

[03:06] Decision trees are supervised learning algorithms for classification and regression.

Decision trees handle both continuous and categorical input data, offering versatility in problem-solving.

They use an 'if-then' structure to partition the data into subsets based on feature values, starting from a root node.
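The 'if-then' structure can be pictured as nested conditions. The tree below is written by hand purely for illustration: the features (`size_cm`, `margin`), the 2.0 cm cutoff, and the labels are hypothetical and carry no medical meaning.

```python
def classify_tumor(size_cm: float, margin: str) -> str:
    """Walk a small hand-written tree from the root to a leaf."""
    # Root node: test on a continuous feature using a threshold.
    if size_cm <= 2.0:
        return "benign"        # leaf
    # Internal node: test on a categorical feature.
    if margin == "smooth":
        return "benign"        # leaf
    return "malignant"         # leaf

print(classify_tumor(1.5, "irregular"))
print(classify_tumor(3.0, "smooth"))
print(classify_tumor(3.0, "irregular"))
```

Each `if` corresponds to one node, and each `return` corresponds to a leaf, so a prediction is simply the path taken from the root.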

*Logistic Regression Basics*

Logistic regression is used for binary dependent variables, represented as 0 (no) and 1 (yes).

It estimates the probability of an event occurring, such as success or failure, making it suitable for situations like a coin toss.

The logistic function transforms the linear combination of inputs into a probability score between 0 and 1.
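Written out, the transformation looks like this. The symbols w (weights), x (inputs), and b (bias) are notation assumed here; the source only names z and the resulting probability.

```latex
z = \mathbf{w}^\top \mathbf{x} + b, \qquad
p = \sigma(z) = \frac{1}{1 + e^{-z}}, \qquad
\log\frac{p}{1 - p} = z
```

The last identity shows that z is the log-odds of the event, which is why z = 0 corresponds to a probability of 0.5.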

*Key Differences: Logistic vs. Linear Regression*

Logistic regression is applicable when the dependent variable is binary, while linear regression is for continuous dependent variables.

Linear regression assumes a linear relationship between the independent variables and the dependent variable, whereas logistic regression assumes linearity only between the independent variables and the log-odds of the outcome.

Linear regression outputs a continuous value, while logistic regression outputs a probability, which can be thresholded to assign a class label.

*Limitations of Linear Regression for Classification*

Linear regression can produce unbounded predictions (below 0 or above 1), which cannot be read as probabilities for binary classification.

For example, using linear regression to predict tumor malignancy can lead to incorrect classifications when the range of tumor sizes is wide: a few very large tumors flatten the fitted line and move the point where it crosses the threshold.

The classification threshold for linear regression may therefore need frequent adjustment, reducing its effectiveness for binary outcomes.
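The effect can be reproduced with a few made-up data points. This sketch fits a one-feature least-squares line in plain Python and reports where the line crosses 0.5 before and after a single very large tumor is added; the data and helper names are assumptions for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def crossing_point(slope, intercept, threshold=0.5):
    """Tumor size at which the fitted line reaches the threshold."""
    return (threshold - intercept) / slope

# Made-up data: tumor sizes in cm, label 1 = malignant.
sizes = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
labels = [0, 0, 0, 1, 1, 1]

slope, intercept = fit_line(sizes, labels)
boundary_before = crossing_point(slope, intercept)

# Add one very large malignant tumor and refit.
slope2, intercept2 = fit_line(sizes + [30.0], labels + [1])
boundary_after = crossing_point(slope2, intercept2)

print("boundary before:", round(boundary_before, 2))
print("boundary after: ", round(boundary_after, 2))
print("prediction at 4 cm after refit:", round(slope2 * 4.0 + intercept2, 3))
print("prediction at 30 cm after refit:", round(slope2 * 30.0 + intercept2, 3))
```

With this data the boundary starts at 3.5 cm and moves past 4 cm after the refit, so the 4 cm malignant case falls below 0.5, while the prediction for the 30 cm case exceeds 1.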

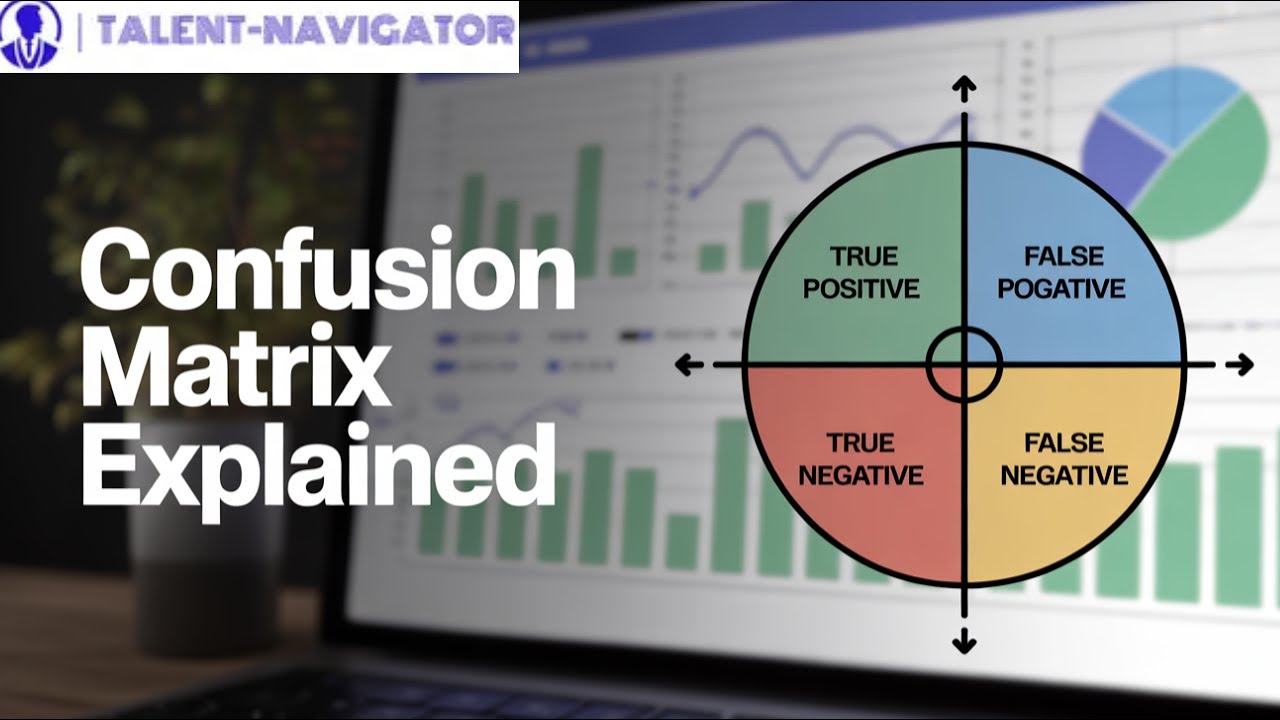

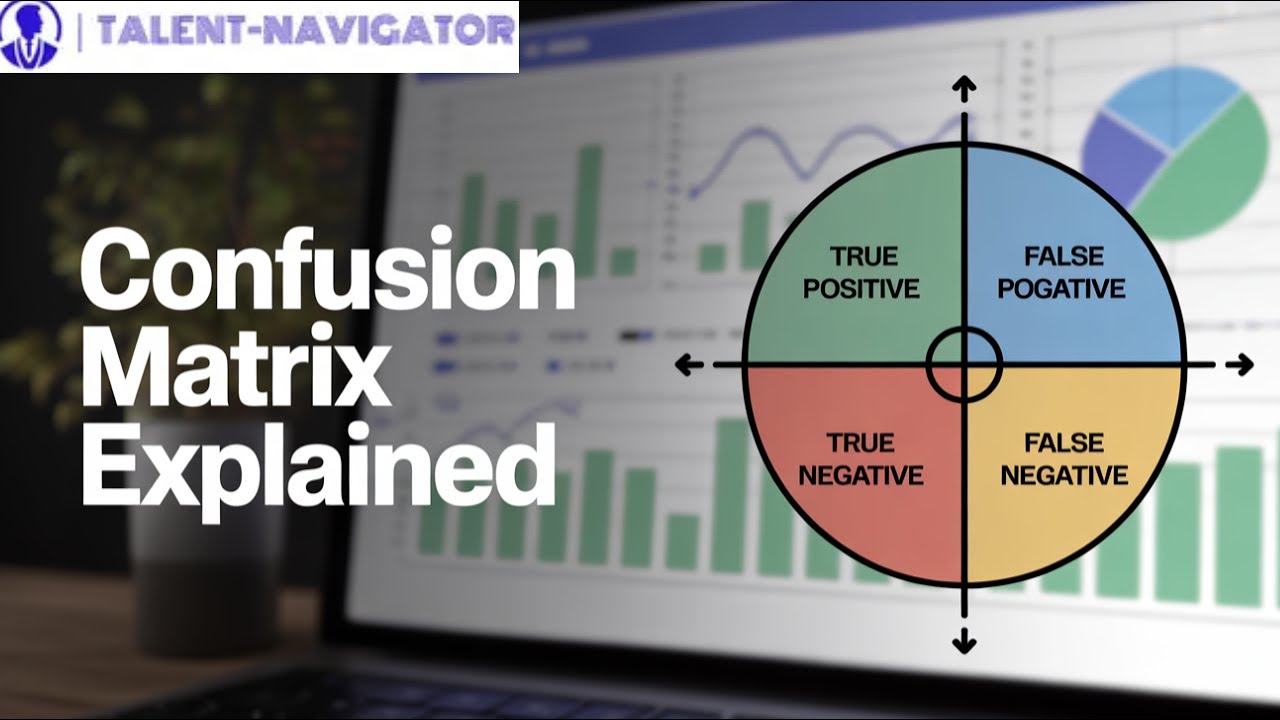

*Understanding Decision Trees*

A decision tree is a supervised learning algorithm used for both classification and regression tasks, accommodating both continuous and categorical inputs.

It operates on "if-then" logic, structuring decisions through internal nodes (each testing a feature) and leaves (each representing an outcome).

The initial training set is treated as the root, and the tree is built recursively based on statistical analysis of attribute values.
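The recursive construction might look like the sketch below. The source does not say which statistic is used to choose splits, so Gini impurity is an assumption here, as are the function names, the depth limit, and the toy data. Only numeric features are handled.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels (0.0 means pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(rows, labels):
    """Return (feature_index, threshold) that lowers weighted Gini most, or None."""
    best, best_score = None, gini(labels)
    n = len(rows)
    for f in range(len(rows[0])):
        values = sorted(set(r[f] for r in rows))
        # Candidate thresholds are midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build_tree(rows, labels, depth=0, max_depth=3):
    """Recursively split the data; a leaf is the majority label."""
    split = best_split(rows, labels) if depth < max_depth else None
    if split is None:
        return Counter(labels).most_common(1)[0][0]
    f, t = split
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([r for r, _ in left], [y for _, y in left], depth + 1, max_depth),
        "right": build_tree([r for r, _ in right], [y for _, y in right], depth + 1, max_depth),
    }

def predict(tree, row):
    """Follow the if-then tests from the root down to a leaf."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree

# Toy data: one feature (tumor size in cm), label 1 = malignant.
rows = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
labels = [0, 0, 0, 1, 1, 1]
tree = build_tree(rows, labels)
print(tree)
print(predict(tree, [2.5]), predict(tree, [5.5]))
```

The whole training set enters at the root, and each recursive call receives only the subset that satisfied the parent's test. Recursion stops when no split reduces impurity or the depth limit is reached.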

*Structure of Decision Trees*

The root node represents the entire dataset, with branches leading to internal nodes based on feature values.

Internal nodes can test categorical features directly, while continuous features are discretized, typically by splitting on a threshold value.

Visual representation helps in understanding the flow from the root through several decisions to the final outcomes, facilitating clearer predictions.
